edocs Home > BEA AquaLogic Data Services Platform 3.0/3.2 Documentation > ALDSP 3.2 New Features Documentation

| Original author | Michael Reiche |

| Documentation |

John Jerney |

| Version(s) supported |

ALDSP 3.2 / 3.01 |

Best Practices When Building Data Services

This topic introduces a series of best practices you can consider when building data services using ALDSP.

Topic Map

- How ALDSP Dataspaces Work

- ALDSP Development Best Practices

- Performance and Optimization Best Practices

- Database Access

- Exercising Care when Using Fail-over, Fail-over-retry, and Timeout

- Using Inverse Functions

- Using Caching and Auditing

- Query Plans

- Monitoring Operational Performance and Service Level Agreements

- How To Get More Help

- Related Topics

How ALDSP Dataspaces Work

This section provides an overview of the ALDSP client-server architecture and provides a basic introduction to how ALDSP dataspaces work.

Overview

The ALDSP runtime is hosted inside a WebLogic server container and can co-exist with other server platforms such as AquaLogic Service Bus 3.0 (ALDSP 3.2 and higher), WebLogic Integration (WLI) or WebLogic Portal. Clients access the ALDSP data services through the Data Service Mediator API, Web Services API, the ALDSP JDBC driver, and the Service Bus ALDSP Transport. BEA Workshop-based applications can access ALDSP data services through an ALDSP Control.

ALDSP was one of the first products to introduce the concept of dataspaces. A dataspace is a unit of deployment, administration, and security policy control. A single ALDSP runtime environment can host one or more dataspaces. A dataspace contains a set of related data services. Data services hosted in a dataspace run in the same context and can be reused from other data services.

Understanding the ALDSP Server

When the ALDSP server receives a request, it does the following:

- The ALDSP server looks up the data service by namespace, and the operation by function name and number of arguments.

- The server looks in the query plan cache for a compiled query plan. If the server does not find one, it compiles the query and caches the resulting query plan. (The query plan cache uses a "most-recently-used" algorithm, with a default size of 100 plans.)

- After the server has a query plan, it binds the operation's arguments to the plan.

- The server runs the query and streams the data from the back-end systems through the ALDSP server.

There are cases, however, that require full materialization of the data inside the ALDSP server, such as during an in-memory sort operation run in the ALDSP server middle tier. For these types of operations, the server can optionally use secondary storage (disk) as needed so as not to exhaust the virtual memory of the JVM. Here are some specifics: - If the operation is initiated using the data service mediator API or the ALDSP JDBC driver, the query result can be streamed all the way to the client as the client iterates through the results.

- If the operation is initiated using the Web Service client API or DSP transport, the results are streamed through the server but fully materialized inside the server before sending the results to the client.

Understanding the ALDSP Client

For detailed information about ALDSP clients, refer to Introducing Data Services for Client Applications in the Client Application Developer's Guide.

ALDSP Development Best Practices

ALDSP, similar to other advanced development systems, offers a powerful and flexible environment for creating high performance and feature-rich programs. This section provides a series of best practice guidelines for organizing and structuring your projects.

| Best Practice | Overview |

|---|---|

| Organize using dataspace projects |

You can reduce build and deployment times by organizing your data services into projects. |

| Build data services in layers |

Building projects using a layered approach can simplify development and increase reliability. |

Organizing Data Services Using Projects

ALDSP treats all artifacts within a dataspace project as being within the same context. This means that you are free to organize projects in any way that makes logical sense. For example, you could choose to create a single project with 100 data services, or 10 projects with 10 data services each.

In practical terms, however, there are advantages to organizing in specific ways. For example, Studio builds a project at a time. Therefore Studio can typically build a project containing fewer data services faster than one containing a larger number of data services.

|

In general, you should consider organizing data services into projects if the data services (across the projects) do not need to participate in building views. This means, for example, grouping logically-related data services in one project and non-related services in another project, and so on. |

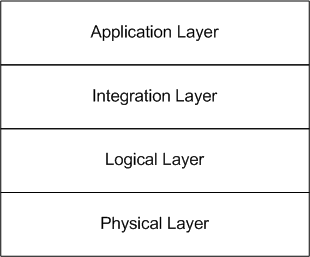

Building Data Services in Layers

ALDSP enables you to design and build your data services as a series of layers extending from the physical data sources to your business and application logic. This section provides an overview of the most common layers used in ALDSP and offers suggestions on the types of operations that you should include in each layer.

ALDSP Layers

The following table provides an overview of the layers in a typical ALDSP dataspace project:

| Layer | Overview |

|---|---|

| Physical |

Contains imported operations related to resources such as databases, Web services, XML files, and Java functions. |

| Logical |

Contains operations matching the physical layer operations that perform simple transformations to the data. |

| Integration |

Contains the coarse-grained business objects that are manipulated by the dataspace project. |

| Application |

Can add a further layer of abstraction and specialization to a data service project. |

Physical Layer

The physical layer contains imported operations related to resources such as databases, Web services, XML files, and Java functions.

To create the physical layer, do the following:

- At the top-level of the dataspace project, create a folder named Physical.

- Create a subdirectory for each physical resource in the Physical folder.

- Import the operations for each resource into the respective folders.

You typically should not modify these physical data services. This makes it easier to re-import changes to the physical resources, if necessary.

Logical Layer

The logical layer contains operations matching the physical layer operations that perform simple transformations to the data.

To create the logical layer, do the following:

- At the top-level of the dataspace project, create a folder named Logical.

- Mirror the directory structure from the Physical folder in the Logical folder. Create a corresponding data service in the Logical folder for each data service in the Physical folder.

- Create a schema for each data service.

- For each data service in the Logical folder, create a new operation that calls the corresponding physical operation.

Assign meaningful names to elements. For example, CUST_ID might become CustomerId. You can also add computation and other logic to operations in the logical layer, for example, LoanDuration = $LOAN/END_DATE - $LOAN/START_DATE.

You can also use the logical layer to define inverse functions, as required. Ideally, this is the only layer that should have expanded mappings, although the application layer can also use expanded element mappings to retrieve required elements.

The logical layer typically does not include significant joins or selections. The layer instead focuses on data access and element construction. Therefore the resulting query plans will simply be calls to the physical source along with element construction.

This means that there is typically little opportunity to improve query efficiency in the logical layer, so it normally does not help to examine the query plans at this layer.

The following shows a sample operation in the logical layer:

function getCustomer() {

for $c in CUSTOMER()

return

<Customer>

<CustomerId>{$c/CUSTOMER_ID}</CustomerId>

<LastName>{$c/LAST_NAME}</ LastName >

<FirstName>{$c/FIRST_NAME}</FirstName>

<Email>{$c/EMAIL}</Email>

<CustomerSince>{$c/CUSTOMER_SINCE}</CustomerSince>

<SupportLevel>{$c/SUPPORT_LEVEL}</SupportLevel>

</Customer>

}

Integration Layer

The integration layer contains the coarse-grained business objects that are manipulated by the dataspace project.

To create the integration layer, do the following:

- At the top-level of the dataspace project, create a folder named Integration.

- Create a folder in the Integration directory for each group of coarse-grained business objects you plan to create.

You might, for example, have folders named Ordering, Customer, and Product.

The integration layer is where you should perform data integration, joining, for instance Customer, Order, and LineItemto create the coarse-grained XML structure CustomerOrderLineItem. - Create a data service for each coarse-grained business object.

If possible, also create a 'get all' base function in the data service (such as CustomerOrderLineItem) that constructs an element by joining elements such as Customer >Order > LineItem.

Do not put selection operations, such as 'by CustomerId' for example, in this 'get all' function. Also, operations in the integration layer should only call operations in the logical layer.

If possible, design data services in the integration layer to only use compound mappings, since expanded mappings are expensive in terms of time and memory. The example below shows how to construct nested output using compound mappings only. Note the introduction of envelope elements. The LineItemEnvelope element is not required since it is at the bottom; it is included for consistency.

function getCustomers(){

for $c in Customer()

return

<CustomerEnvelope>

{$c}

{

for $o in Order()

where $o/CustomerId eq $c/CustomerId

return

<OrderEnvelope>

{$o}

{

for $l in LineItem()

where $l/OrderId eq $o/OrderId

return

<LineItemEnvelope>

{$l}

</LineItemEnvelope>

}

</OrderEnvelope>

}

</CustomerEnvelope>

}

After you have created the base function, you can write specific selection functions using the base function. For example:

function getCustomerById( $customerId as xs:string) {

for $c in getCustomers()

where $c/CustomerId eq $customerId

return

$c

}

function getCustomersByLastName( $LastName as xs:string) {

for $c in getCustomers()

where $c/LastName eq $LastName

return

$c

}

function getCustomersByOrderAmountExceeding( $orderAmount as xs:decimal) {

for $c in getCustomers()

where some $o in $c/OrderEnvelope/Order

satisfies $o/OrderAmount > $orderAmount

return

$c

}

Since the integration layer contains joins and possibly selections, there is the possibility of inefficient query plans. As you create each function, examine the resulting query plan and evaluate its efficiency.

In some cases, it may even be useful to examine the query plan as you develop each function. For instance, when creating the base operation, getCustomers(), consider examining the query plan after you add the join on Orders, but before you add the join on LineItems.

If adding the join Customer > Order produces an inefficient query plan, there is little point in continuing to complicate the query by also joining to LineItem before correcting the join on Customer > Order.

Application Layer

For simple dataspace projects, three layers will likely be sufficient. However, if your dataspace has more demanding requirements, you can add an application layer.

The application layer adds a further layer of abstraction and specialization to a data service project. For instance, you could add row or column-level security in the application layer, or only expose required columns to reduce unnecessary data retrieval. Similarly, you could use the application layer to create a highly-specialized data service to fulfill a specific need.

The structure of the application layer resembles the integration layer, and makes calls directly to the integration layer.

The benefit of these layers is that once they are created, you will have constructed a virtual database around all your disparate data. You will be able to create and modify data services that are only loosely coupled with the underlying physical data. Changes in the underlying data will be much less likely to affect your data service developers both in term of data service development and maintenance.

And your application developers will be able to write to layer that is highly insulated and far more powerful as compared to writing to separate data sources and processing the results locally (perhaps in yet another database).

Performance and Optimization Best Practices

ALDSP attempts to optimize the operation of your dataspace project as well as interactions between your dataspace and the underlying data sources. This section describes a series of best practices that you can use to potentially increase the levels of optimization and boost the performance of your dataspace projects.

| Category | Best Practice |

|---|---|

| Database Access |

|

| Fail-Over | Exercise care when using fail-over, fail-over-retry, and timeout |

| Inverse Functions |

Use inverse functions |

| Caching/Auditing | Take advantage of caching |

| Query Plans |

|

| Performance Monitoring |

Evaluate and monitor operational performance |

Database Access

This section describes performance and optimization best practices related to data access.

Retrieving Only the Necessary Data

Examine the query plan and verify that the SQL statements retrieve only the necessary data. For example, suppose you have the following selection in your XQuery:

where $c/POSTAL_CODE eq $postalCode

You should then find that condition in the SQL statements as:

WHERE POSTAL_CODE = ?

If this clause does not appear in the SQL, you need to determine why this is the case to enable the code generated by ALDSP to be pushed to the database.

Designing Functions Which can be Pushed to the Database

To have a function pushed to the database, and thereby increase efficiency and performance, the function must have an equivalent database operation or an inverse operation. For example, suppose you have the following selection in your XQuery:

where match($c/LAST_NAME, $regexpr)

ALDSP cannot push this selection to the database because there is no equivalent to the match() function in SQL, and there is no inverse function for match() defined in ALDSP.

|

In most cases, your XQuery functions will test for simple equality and inequality. ALDSP can automatically push these types of operators to the database. |

Verifying that Joins are Implemented as Left-Outer or Inner/Natural Joins

You need to consider how joins are implements in your XQuery. For example, suppose you have a join in your XQuery similar to the following:

where $c/CUSTOMER_ID eq $o/CUSTOMER_ID

If both tables are in the same database and the returned XML is nested, the query plan should contain SQL with a left-outer-join of these tables. If the returned XML is flat, the query plan should include SQL with a inner/natural join of the two tables.

If the join is not implemented as either a left-outer or inner/natural join in the SQL, you need to determine why. Similarly, you need to examine options for ensuring that the joins are pushed to the database.

Pushing Left Outer Joins to the Database

The following conditions need to be satisfied for a join producing nested output to be pushed to the database as a left-outer join:

- Both tables need to be in the same database.

- The driving (left) table must have a unique key.

The second condition is necessary because the result set from SQL will contain duplicate rows, and ALDSP needs to detect these rows so that the right-hand side can be grouped by the left-hand side key. If the left table does not have a unique key, ALDSP is forced to get the records from the left table only, generate a key, retrieve corresponding rows from the right table, and join them with those from the left. If this occurs, you will find two SQL statements, one for each table, joined using an index-cpp join and a generate-key() operator above the left table.

If the conditions are met, there is no limit to the number of levels of nested output that can be joined in a single SQL statement. However, if within the nesting, there are sibling child elements, the second child cannot be in the join. For example, Customers > Orders > LineItems > Products > Pricings can be pushed as a single SQL statement with multiple left-outer-joins. But in Customers > (Orders, Addresses), Customers > Orders can be pushed as a left-outer join, but Addresses will be implemented as a clustered, parameter-passing join (cpp).

The reason that Address is not joined as another left-outer join in the same SQL is because adding it would produce a cross-product between Orders and Addresses as they are not dependent on each other. So, if a Customer had 1,000 orders and 1,000 addresses, the resulting SQL would return 1,000,000 rows and ALDSP would be left to remove the duplicates.

By splitting this into two separate SQL statements, the first would retrieve 1,000 rows and the second would retrieve another 1,000 rows (total 2,000). In addition, the reason that Address is not retrieved in the same SQL statement through a union is that this would disrupt the streamability of the query, and ALDSP would need to read all of the Customer > Order rows before it got to the first Customer > Address row.

Although this would not be a problem for small results, ALDSP is unaware of the result set size and, whenever possible, prepares a query plan that can handle results of any size.

Using a ppci-impl Join

When ALDSP cannot push the join of two tables to the database, the system implements the join as a parameter-passing, clustered-indexed join. Specifically, consider a JDBC dataspace project that needs to retrieve the list of customers and their associated orders. You could use code similar to the following:

=// will need stmt2 later stmt2=conn.prepareStatement("select * from ORDER where CUSTOMER_ID = ?"); stmt1= conn.prepareStatement("select * from CUSTOMER where ZIPCODE=?"); stmt1.setString(1, zipcode); rs1 = stmt1.executeQuery(); while (rs1.next() ){ System.out.println("customer :"+rs1.getString("CUSTOMER_ID")); stmt2.setString(1, rs1.getString("CUSTOMER_ID")); // set parameter rs2=stmt2.executeQuery(); while( rs2.next() ) { System.out.println(" order :"+rs2.getString("ORDER_ID")); } }=

This uses a parameter-passing join (the parameter is CUSTOMER_ID). If, however, you have a lengthy list of customers from the first SQL statement, you will need to call the second SQL statement quite a few times. One thing you could do to reduce the number of calls would be to fetch orders from several customers at a time. Once you have the orders for several customers, you can manually join the rows with the customers.

This is exactly what ALDSP does automatically for you! So, if you have a join that can not be pushed as a single statement, ALDSP performs a batched (clustered) parameter-passing join. The first SQL statement would therefore be as follows:

select ... from CUSTOMER ...

Similarly, the second statement would be as follows:

select ... from ORDER where CUSTOMER_ID = ? or CUSTOMER_ID = ? ....

You may wonder why there are 20 arguments. Why not use the exact number of customers, for example? The answer is that there are limits to the number of arguments that you can pass to an SQL statement.

But, more importantly, database performance quickly degrades as more arguments are passed (at some point, a full-table scan would be a good idea if there are many lookups to perform). That's the first reason why there are 20 arguments.

Second, if you were to specify the exact number of arguments, you would need to create a separate SQL statement (and PreparedStatement) in the worst case, for up to 20 arguments resulting in 20 times the number of PreparedStatements that you really need.

In addition, since the database needs to compile PreparedStatements not encountered earlier, this would require 20 times the number of compilations. In some cases, this could even cause statements to be discarded from the cache, resulting in even more compiling.

|

When a JDBC connection pool maintains a cached PreparedStatement, it uses resources (cursors) in the database. This is a further motivation to keep the number of PreparedStatements to a minimum. |

The final point to consider is what happens when the number of customers is not an exact multiple of 20? In this case, the final argument is repeated to fill in the remaining places since the logical operation CUSTOMER='ZZZ' OR CUSTOMER='ZZZ' is the same as CUSTOMER='ZZZ'. This is a very simple optimization for a database and testing has shown that it does not significantly impact performance.

Note that ppci-join seems like a lot of effort simply to eliminate a few database round trips. If the join is on an indexed column, there will be 20 indexed lookups independent of whether you make one call with 20 parameters, or make 20 calls with one parameter.

While this is the case, if the join is on an unindexed column, one call with 20 parameters takes one full table scan and 20 calls with one parameter takes 20 full table scans. Obviously you may need a noticeable performance difference between these two approaches.

Avoiding Casting

You should generally avoid casting data, especially casting that cannot be performed by the database.

The reason for this is as follows: If there is a cast from one type to another for the purpose of a join or a selection, and that cast cannot be handled by the database, ALDSP is forced to retrieve all the rows and perform the cast itself, followed by the join or selection.

You can determine if the casting cannot be performed by the database by examining the cast operators in the query plan.

Optimizing Ad Hoc Queries

Clients can submit ad hoc queries, which are essentially data service functions that exist only while the queries are run. From the engine's perspective, ad hoc queries go through the same life-cycle as regular queries.

ALDSP caches the query plan from an ad hoc query, just as it does with a query plan for a data service function. If the same ad hoc query is run again, the cached query plan is used.

If you are relatively certain that the same ad hoc query will not be requested a second time, you can have the client application instruct ALDSP not to cache the ad hoc query plan using the following request config attribute:

DO_NOT_CACHE_QUERY_PLAN

However, use of this directive is generally not needed. (Note that using the ALDSP Filter API generates an ad-hoc query based on the filter.)

You can use the ALDSP Query Plan view to display the query plan and present hints regarding operators that may not be optimal. ALDSP also supplies audit information that shows additional details of the query run. Both of these tools are helpful for developing ALDSP dataspace projects.

Writing Your Own SQL for ALDSP to Use

You should only consider writing your own SQL statements for ALDSP to use when it's easier than letting ALDSP generate the SQL statements.

However, keep the following in mind if you choose to write your own SQL:

- It gets more difficult as the queries become more complex.

- ALDSP is limited in the optimizations that it can perform with SQL that you have written.

- ALDSP cannot use your SQL statements with a ppci-impl join.

- ALDSP cannot use your SQL statements containing a left-outer join to implement nested elements.

Note that ALDSP attempts to push all access to the same database into a single SQL statement, with the following exceptions:

- ALDSP does not push unions to the database because this does not offer a significant improvement and forces the system to group the retrieved rows in the query engine. It can also break streamability.

- ALDSP does not push cross-products to the database because a cross product with 1,000 rows on the left and 1,000 rows on the right results in 1,000,000 rows (instead of 1,000 + 1,000).

- ALDSP does not push a left-outer join to the database in cases when the left-hand side does not have a unique key. For example, ALDSP does not push the following:

A left-outer-join B on A.x=B.x left-outer-join C on A.y = C.y

This results in a cross-product as B and C are not related.

Exercising Care when Using Fail-over, Fail-over-retry, and Timeout

The BEA XQuery fail-over function is an XQuery extension that enables you to catch unexpected, unavoidable exceptions, such as a database or a Web service being unavailable.

To appreciate the advantage of using the fail-over function, consider the example of a Web service that is overloaded to the point that calling it blocks for five minutes and then fails. If an XQuery calls this Web service 100 times, it could take up to 500 minutes to complete the query.

The fail-over mechanism provides a solution in that once the primary expression fails, the assumption is that it will continue to fail for some time, so there is no need to re-evaluate within the same query execution.

Notwithstanding this feature, however, you should exercise care in using the fail-over, fail-over-retry, and timeout functions. This is because when you specify that a certain portion of the XQuery is to fail-over, the compiler and optimizer does exactly that and cannot combine expressions outside the fail-over with expressions inside the fail-over.

For example, suppose that you are retrieving a database table using the CUSTOMER() function, but want it to fail-over if the database is not available. You could use the following expression:

for $CUSTOMER in fail-over( CUSTOMER_in_db_1(), CUSTOMER_in_db_2())

Consider further that in a higher-level function, you select from that result using the following clause:

where $CUSTOMER/CUSTOMER_ID = $CustId

Because of the fail-over, ALDSP is forced to read the entire CUSTOMER table and then, in memory, select the one customer that you need.

Note that use of Java functions put similar restrictions on the ALDSP compiler and optimizer, since it cannot examine the Java functions (which are opaque to ALDSP). This can also thwart optimizations.

One solution is to move Java function logic into ALDSP operations. If this is not possible, and you find that the Java functions are blocking optimizations, you might explore using inverse functions.

Using Inverse Functions

ALDSP enables data service developers to register inverse functions with the system, enabling you to define and use general user-defined data transformations without sacrificing query pushdown and updateability. Using this information, ALDSP is able to perform a reverse transformation of the data when analyzing query predicates or attempting to decompose updates into underlying data source updates.

Consider the following example: You have a column called dateTimes in a database, defined as the number of milliseconds since January 1, 1970. You then declare a function called millisToDateTime() which converts the millisecond values to dateTime enabling you to query on that column and retrieve a dateTime that you can display as part of the query output.

Now suppose that you have a dateTime value in XQuery, either from a query argument or from another data source, and you want to retrieve all the rows that match the dateTime from the database table. The only obvious solution is to retrieve every row from the table, convert the milliseconds to dateTime, and then compare it to the dateTime value in the XQuery (discarding all rows that do not match).

This is very inefficient. It would be better to convert the dateTime to milliseconds, and push that selection to the database. You could write a dateTimeToMillis() function and use the output to select directly on the milliseconds column. But there are a couple problems with this approach.

First, you would need to expose the milliseconds column to the outside world. And second, you would need to know information about the milliseconds column to use the dateTimeToMillis() function.

A much more elegant solution is to define an inverse function for the millisToDateTime() function that, given the output of millisToDateTime(), returns the input argument. Once defined, ALDSP can run the dateTime value through the inverse function to obtain the correct value in milliseconds to push to the database.

Since ALDSP can determine that the milliseconds value from the database was sent through millisToDateTime(), it knows that applying the inverse function to a dateTime will return a value suitable for the database.

| Using Inverse Functions to Improve Query Performance |

Using Caching and Auditing

Caching or auditing a specific function requires that the function call remain in the query plan. This means that if you cache a function such as getOrders($customer), the optimizer cannot combine the function getCustomers() with getOrders($customer) to produce the following SQL statement:

select ... from CUSTOMER C, ORDER O where C.CID eq O.CID

Instead, the optimizer has to leave the getOrders($customer) function as the following:

select ... from ORDER O where O.CID = ?

Another consideration may be less obvious. Suppose you have a function getCustomerCreditScore($cid) that retrieves the credit score from the getAllCustomerInfo($cid) function. If the getAllCustomerInfo($cid) function is not cached, then the resulting query plan needs to access only the database tables necessary to get the credit score.

However, if the getAllCustomerInfo($cid) function is cached, then the optimizer cannot determine exactly the data from getAllCustomerInfo($cid) that may be required by a different function that calls getAllCustomerInfo($cid).

This means that ALDSP will have no choice but to retrieve and cache everything. In the case that the getAllCustomerInfo($cid) function retrieves the customer profile, orders, customer support cases, credit information, and so on, and all you need is the credit score, you would be forced to retrieve (and cache) an excessive amount of data just to get the credit score.

Query Plans

This section describes performance and optimization best practices related to query plans.

Evaluating Performance Before Running the Query

You should consider examining the query plan for an operation and evaluating its expected performance before running the query. This is useful because, in some cases, the operation may run endlessly as a result of an infinite loop.

Precompiling Query Plans

Precompiling query plans can increase performance, especially since the initial compile time can sometimes be significant. However, this is not always the case.

Instead of immediately precompiling your query plans, examine the plan to see if you can reduce the compile time in other ways. For example, using compound mappings can often help.

| Post to the Oracle Data Integration forum if you would like to be forwarded a startup class that precompiles query plans. |

Evaluating the Performance by Running the Query

Ultimately, the best way to evaluate the performance of an operation is to run the query and examine the results.

To evaluate performance, do the following:

- Turn on auditing.

Do not enable auditing of individual functions; this changes the query plan. - Run the query and examine the audit information.

- If the query does not finish running, check the query plan. Look at the individual SQL execution times. If you see simple SQL statements that take excessively long to run, check that you have the appropriate indexes defined.

- Run the SQL through 'explain plan' and check whether the SQL is retrieving more rows that needed.

- Finally, verify that the selections and joins are getting pushed to the database.

Monitoring Operational Performance and Service Level Agreements

For information about monitoring operational performance and Service Level Agreements (SLA), refer to the audit and profiler samples installed with ALDSP 3.2 and 3.01.

|

The listener sample sample writes audit records to a database and produces the necessary reports.

How To Get More Help

For additional help and further suggestions for dataspace optimization, post to Post to the Oracle Data Integration forum.Customers can also open cases with Oracle Customer Support, as required.